While animating a camera, it is often desirable to quickly set the focus on a specific object. The “Focus on Object” field in Blender is incredibly useful for this, since it lets you pick a focus object with the eyedropper tool. However, the focus then depends on that link: as long as an object is assigned, it overrides the “Focus Distance” field, and if the link is severed, the focus on that object is lost. I wanted a way to capture the correct focus distance from the focus object before severing the link, so I wrote a script that calculates the distance and writes it to the “Focus Distance” field automatically. The script triggers when the focus object property changes from empty to populated. It then calculates the distance between the camera and the object, prints it to the console, and sets the focus distance. You can then break the link to the focus object while keeping the focus at the last calculated distance.
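The trigger is a small piece of edge detection: remember the previous value of the focus object and act only when it goes from empty to populated. The sketch below illustrates that idea in plain Python, outside Blender. `FocusTracker` and the coordinate tuples are hypothetical stand-ins for the camera data, not part of Blender's API.

```python
import math


class FocusTracker:
    """Stand-in for the handler's state: remembers the previous focus target."""

    def __init__(self):
        self.last_target = None      # None means the focus field is empty
        self.focus_distance = None   # last distance written by the tracker

    def update(self, camera_pos, target_pos):
        """Call on every scene update; target_pos is None when nothing is linked.

        Returns True only when the distance was recalculated.
        """
        triggered = self.last_target is None and target_pos is not None
        if triggered:
            # Straight-line distance between the two world-space positions
            self.focus_distance = math.dist(camera_pos, target_pos)
        self.last_target = target_pos
        return triggered


tracker = FocusTracker()
camera = (0.0, 0.0, 0.0)
print(tracker.update(camera, None))             # field empty: nothing happens
print(tracker.update(camera, (3.0, 4.0, 0.0)))  # empty -> populated: distance stored
print(tracker.update(camera, (3.0, 4.0, 0.0)))  # unchanged: no recalculation
print(tracker.update(camera, None))             # link severed: distance is kept
print(tracker.focus_distance)
```

One consequence of this design is that switching directly from one focus object to another does not recalculate anything; the field has to be cleared first.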

How to Use the Script:

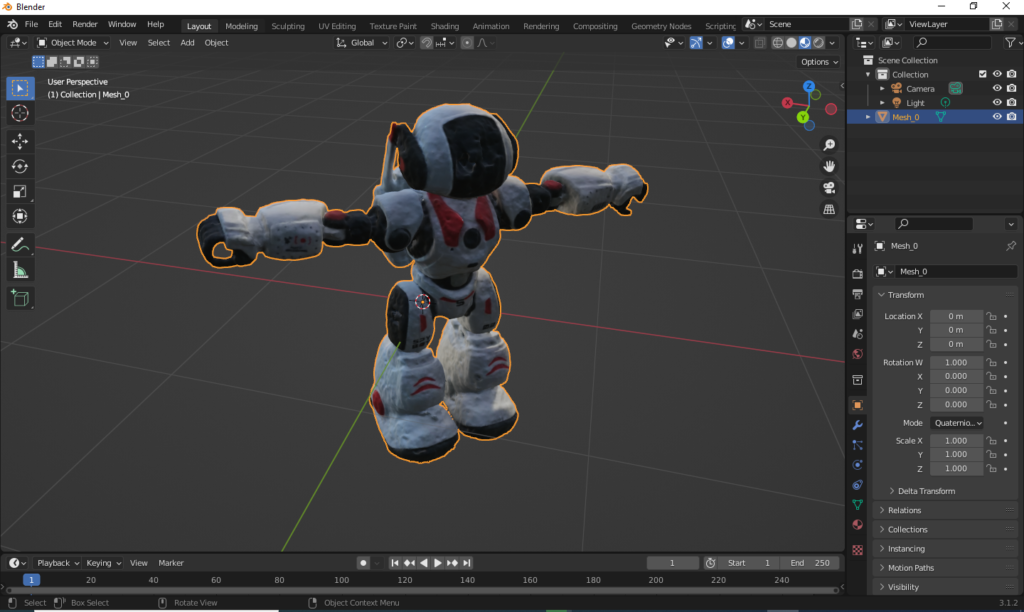

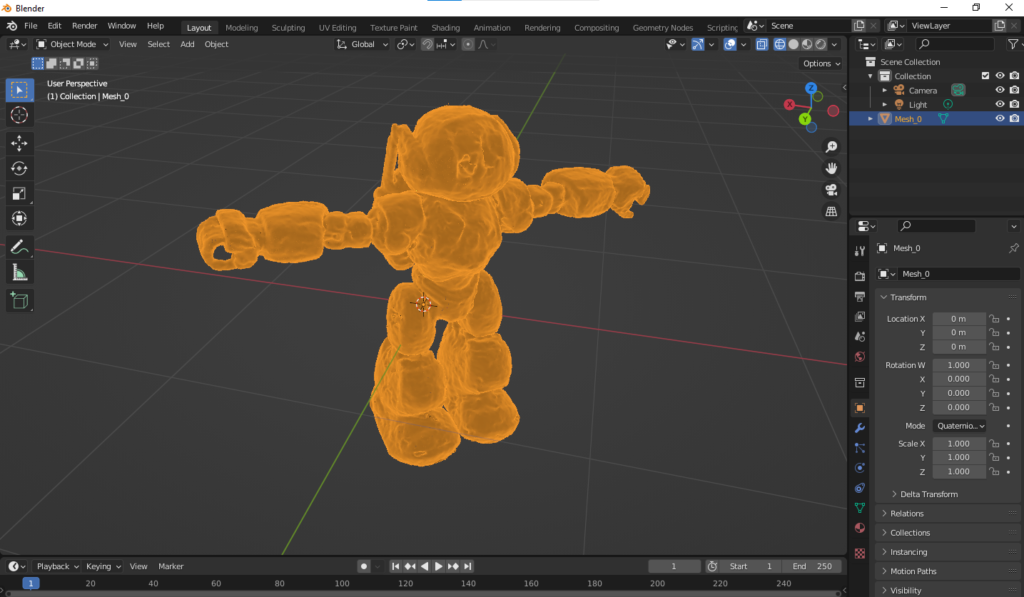

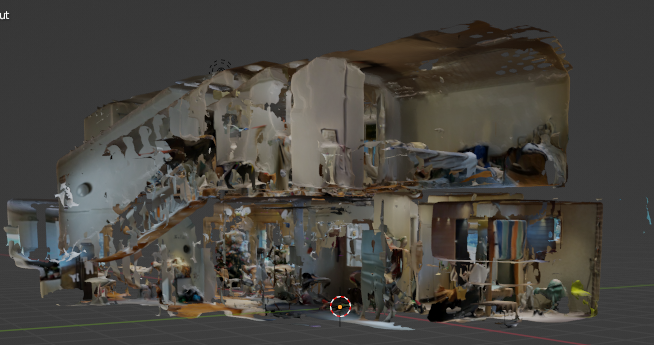

- Open Blender and Set Up Your Scene: Ensure your scene contains at least one camera and other objects you might want to focus on.

- Open the Scripting Tab: Navigate to the ‘Scripting’ tab in Blender to access the Python console and scripting area.

- Paste the Script: Copy the script provided below and paste it into a new text block in the Text Editor of the Scripting tab.

- Run the Script: After pasting, run the script by pressing the ‘Run Script’ button. This will activate the script, and it will start monitoring changes to the focus object property.

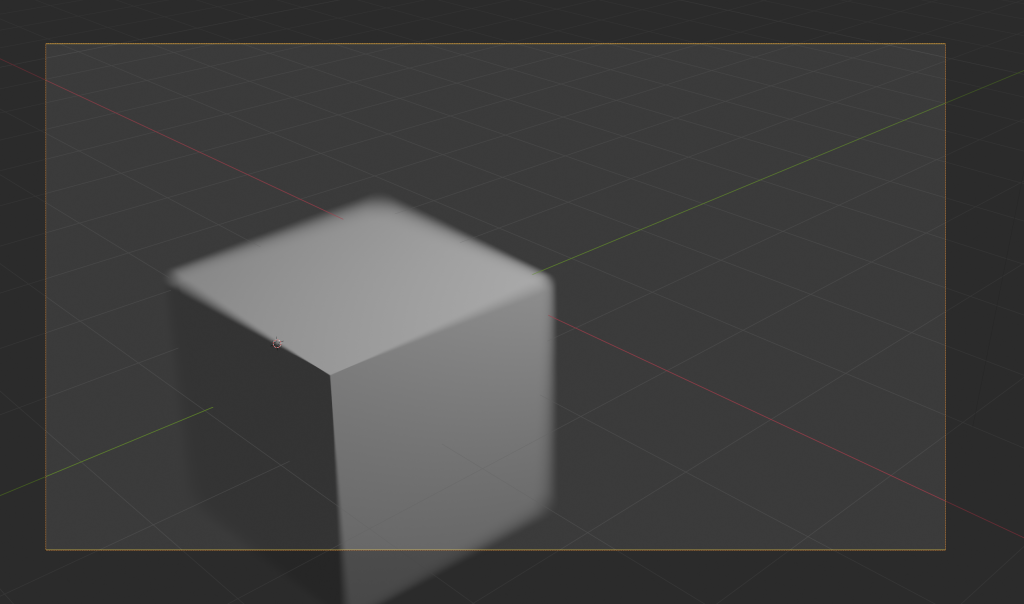

- Use the Camera’s Focus Object Field: Go to your camera’s Object Data properties, enable Depth of Field, and use the eyedropper in the “Focus on Object” field to select a focus object from your scene. The script will automatically update the “Focus Distance” to match the distance to the selected object. Note that it only watches the scene’s active camera, and only while Depth of Field is enabled.

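- View the Output: The focus distance is printed to Blender’s system console, not to the Python console in the Scripting tab. On Windows, open it via Window > Toggle System Console; on Linux and macOS, start Blender from a terminal to see the output. The same value is also written to the camera’s “Focus Distance” field.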

- Sever the Link If Desired: You can now clear the “Focus on Object” field without losing the focus distance, because the script has already written the last calculated value into the “Focus Distance” field.

Here is the script:

    import bpy

    # The focus object seen on the previous update (None means the field was empty)
    last_focus_object = None


    def update_focus_distance_if_needed(scene):
        """Set the focus distance when the focus object goes from empty to set."""
        global last_focus_object

        camera = scene.camera
        if camera and camera.data.dof.use_dof:
            current_focus_object = camera.data.dof.focus_object

            # Act only on the transition from no focus object to a focus object
            if last_focus_object is None and current_focus_object is not None:
                # Distance between the world-space origins of camera and target
                camera_location = camera.matrix_world.translation
                target_location = current_focus_object.matrix_world.translation
                focus_distance = (target_location - camera_location).length

                camera.data.dof.focus_distance = focus_distance
                print(f"Focus distance updated to: {focus_distance}")

            # Remember the current focus object for the next update
            last_focus_object = current_focus_object


    def register_handlers():
        global last_focus_object

        # Start from the camera's current focus object, if any
        camera = bpy.context.scene.camera
        if camera and camera.data.dof.use_dof:
            last_focus_object = camera.data.dof.focus_object

        # Drop copies left over from earlier runs so the handler is not stacked
        handlers = bpy.app.handlers.depsgraph_update_pre
        for handler in list(handlers):
            if getattr(handler, "__name__", "") == "update_focus_distance_if_needed":
                handlers.remove(handler)

        handlers.append(update_focus_distance_if_needed)
        print("Handler registered to monitor focus object changes.")


    register_handlers()
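One caveat about the calculation: the script uses the straight-line distance between the two origins. As far as I can tell, Blender's own “Focus on Object” measures depth along the camera's viewing direction instead, so the two values should agree only when the object sits on the camera's optical axis. Treat that as an assumption to verify in your Blender version. The plain-Python sketch below compares the two measures; the function names and sample coordinates are hypothetical and not part of Blender's API.

```python
import math


def straight_line_distance(camera_pos, target_pos):
    """Distance between the two origins, as the script above computes it."""
    return math.dist(camera_pos, target_pos)


def view_axis_depth(camera_pos, view_dir, target_pos):
    """Depth of the target measured along the camera's viewing direction.

    view_dir does not need to be normalized; its length is divided out here.
    """
    norm = math.sqrt(sum(c * c for c in view_dir))
    offset = [t - c for t, c in zip(target_pos, camera_pos)]
    return abs(sum(o * d for o, d in zip(offset, view_dir))) / norm


camera_pos = (0.0, 0.0, 0.0)
view_dir = (0.0, 1.0, 0.0)      # hypothetical camera looking along +Y
target_pos = (3.0, 4.0, 0.0)    # object 4 units ahead, 3 units to the side

print(straight_line_distance(camera_pos, target_pos))     # 5.0
print(view_axis_depth(camera_pos, view_dir, target_pos))  # 4.0
```

Inside Blender, a camera looks down its local -Z axis, so the viewing direction can be obtained by rotating `Vector((0.0, 0.0, -1.0))` with the rotation part of `camera.matrix_world`. If the focus shifts slightly after you sever the link to an off-center object, this difference is a likely cause.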